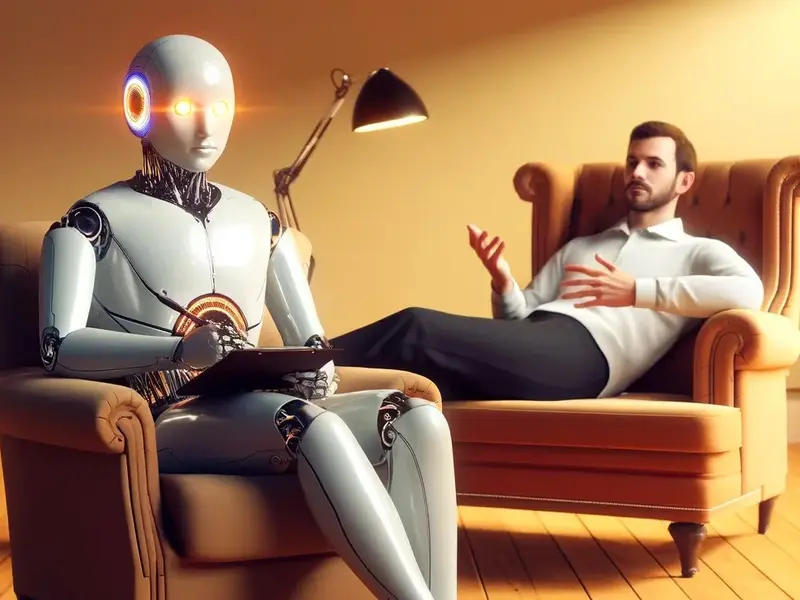

Artificial intelligence is increasingly being integrated into mental health support through chatbots and virtual assistants, often referred to as AI therapists. These tools are designed to offer basic emotional guidance, coping strategies, and structured exercises. While they do not replace licensed therapists, their use is expanding across global mental health services.

A 2023 report from the World Health Organization (WHO) highlights that digital mental health tools can improve access to care, particularly in regions with limited mental health resources. However, the report also underscores potential risks, such as threats to data privacy and the absence of human judgment in sensitive situations.

Advantages of AI Mental Health Tools

- 24/7 Accessibility: Unlike traditional therapy, AI chatbots are available around the clock, providing immediate support when human professionals are not accessible.

- Reduced Stigma: The anonymity offered by chatbots can make it easier for individuals to discuss personal struggles. Research published in JMIR Mental Health indicates that many young users feel more comfortable sharing stressors with AI than with adults.

- Personalized Guidance: Platforms like Woebot and Wysa employ machine learning to tailor their responses to individual users over time. Many incorporate cognitive behavioral therapy (CBT) techniques to help users reframe negative thought patterns.

Limitations and Risks

- Limited Human Understanding: AI systems can overlook emotional subtleties or cultural nuances. A 2022 study in Frontiers in Psychology found that some users perceived chatbot responses as generic or misaligned with their experiences.

- Inadequate for Crises: AI chatbots are not equipped to manage emergencies such as suicidal ideation. Developers often include prompts directing users to crisis hotlines, but a delayed or mistaken response at that point could have serious consequences.

- Privacy and Data Security Concerns: Sensitive information shared through apps may be vulnerable to unauthorized access or misuse. The American Psychiatric Association has advocated for stricter regulations to protect users’ data.

- Cultural Bias: Many AI models are primarily trained on English-language data, which can limit their ability to meet the needs of diverse populations.

AI as a Complement, Not a Replacement

Experts generally agree that AI should support rather than replace human therapists. AI can broaden access to care, encourage help-seeking behavior, and provide coping strategies, but decisions about diagnosis and treatment must remain with trained professionals.

Regulators and mental health organizations emphasize the importance of clear standards regarding how these tools are marketed and how user information is stored. WHO advises that AI should enhance human-led care, offering more entry points to mental health resources while keeping professional therapists in the central role.